- Introduction

In real-world applications, incremental data are often partially labeled.

ex) Face Recognition, Fingerprint Identification, Video Recognition

→ Semi-Supervised Continual Learning: Insufficient supervision and large amount of unlabeled data.

SSCL 에선 기존 CL 에서 사용하던 regularization-based method, replay-based method 가 잘 작동하지 않음 (왜 Architecture-based method 를 뺐는지는 모르겠음)

다만, Joint training 에서 strong semi-supervised classifier 는 significantly outperform

→ Catastrophic forgetting of unlabeled data problem in SSCL

► Online replay with discriminator consistency (ORDisCo): Continually learns the classifier with GAN

Replay data is sampled from the conditional generator to the classifier in an online manner.

Main Contributions

- Semi-supervised Continual learning

- ORDisCo: classifier & conditional GAN

- Various benchmarks

- Method

Problem Formulation: Labeled & Unlabeled data for each task data with CIL setting (No task label)

Conditional Generation on Incremental Semi-supervised Data

Triple-network structure which learns a classifier with conditional GAN (from SSL GAN sota)

Classifier $\mathit{C}$, Generator $\mathit{G}$, Discriminator $\mathit{D}$

Classifier Loss

$\mathit{L}_{\mathit{C},pl}(\theta_\mathit{C}) = \mathit{L}_{sl}(\theta_\mathit{C})+\mathit{L}_{ul}(\theta_\mathit{C})$

Discriminator Loss

$\mathit{L}_{\mathit{D},pl}(\theta_\mathit{D}) = \mathbb{E}_{x,y\sim b_l\cup smb}[\log{(D(x,y))}] +\alpha \mathbb{E}_{y'\sim p_{y'}, z \sim p_z}[\log{(1-D(G(z,y'),y'))}]$

$+(1-\alpha) \mathbb{E}_{x\sim bu}[\log{(1-D(x,\mathit{C}(x))}]$

Generator Loss

$\mathit{L}_{\mathit{G}}(\theta_\mathit{G}) = \mathbb{E}_{y'\sim p_{y'}, z \sim p_z}[\log{(1-D(G(z,y'),y'))}] $

Improving Continual Learning of Unlabeled Data (Forgetting 완화 방법)

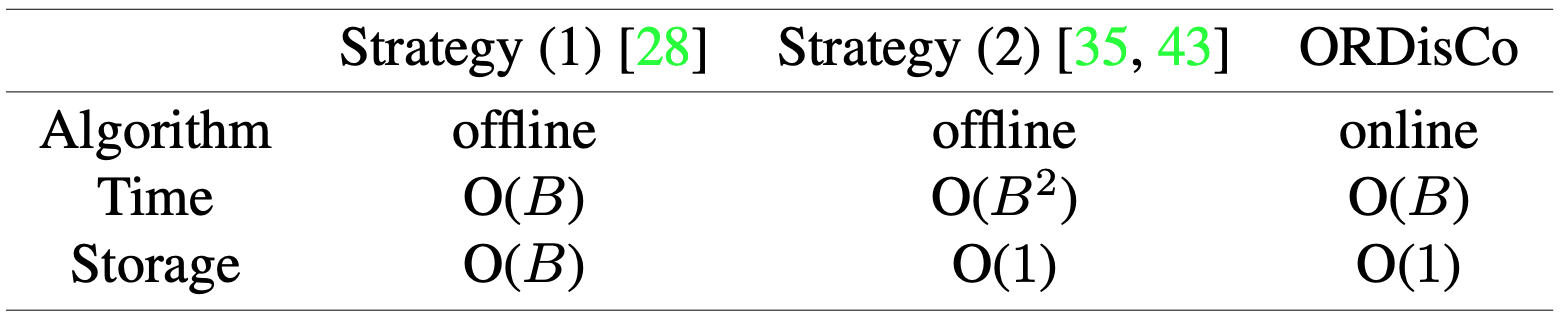

(1) Online semi-supervised generative replay

Offline generative replay

- All old generators are saved and conditional samples are replayed for the classifier

- Each current task generator is saved

ORDisCo

- Generate samples from $\mathit{G}$ and replay them to $\mathit{C}$

- Time and storage efficient

Classifier Loss

$\mathit{L}_{\mathit{C}}(\theta_\mathit{C})=\mathit{L}_{\mathit{C},pl}(\theta_\mathit{C}) + \mathit{L}_{\mathit{G}\to \mathit{C}}(\theta_\mathit{C})$

Generator Loss

$\mathit{L}_{\mathit{G}\to \mathit{C}}(\theta_\mathit{C})=\mathbb{E}_{y'\sim p_{y'}, z \sim p_z}[\log{(1-D(G(z,y'),y'))}]$

$+\mathbb{E}_{y'\sim p_{y'},z\sim p_z, \epsilon,\epsilon '} [\left\|\mathit{C}(\mathit{G(x,y'),\epsilon }) -\mathit{C}(\mathit{G(x,y'),\epsilon '})\right\|]$

(2) Stabilization of discrimination consistency

Discriminator Loss

$\mathit{L}_{\mathit{D}}(\theta_\mathit{D})=\mathit{L}_{\mathit{D},pl}(\theta_\mathit{D}) + \lambda \sum_{i}^{}\xi _{1:b,i}(\theta_{\mathit{D},i}-\theta_{\mathit{D},i}^*)^2$

2nd term 은 Parameter Regularization term

- Experiment

Setting

- New Instance[2]: All classes are shown in the first batch while subsequent instances of known classes become available over time

- New Class: For each sequential batch, new object classes are available so that the model must deal with the learning of new classes without forgetting previously learned ones

Dataset

- SVHN

- CIFAR10

- Tiny-ImageNet

New Instance: 30/30/10 batches with small amout of labels to each batch

New Class: Same split with New Instance, but 5 binary classification tasks

Architecture

- Wide ResNet

Baseline

- Mean teacher (MT)

- Supervised Memory Buffer (SMB)

- Unsupervised Memory Buffer (UMB)

- Unified Classifier (UC)

New Instance

New Class

- Discussion

확실히 2021 논문이기도 하고 SSCL 을 처음 제안하다보니, 최근 트렌드와는 조금 다른 setting 들이 보인다.

최근 트렌드에 맞게 multi-task classification 및 일반적인 CIL setting 이 필요해보인다.

- Reference

[1] Wang, Liyuan, et al. "Ordisco: Effective and efficient usage of incremental unlabeled data for semi-supervised continual learning." CVPR 2021 [Paper link]

[2] Parisi, German I., et al. "Continual lifelong learning with neural networks: A review." Neural networks 2019 [Paper link]

'Paper Review > Continual Learning (CL)' 카테고리의 다른 글

| [CVPR 2023] CODA-Prompt: COntinual Decomposed Attention-based Prompting for Rehearsal-Free Continual Learning (0) | 2023.03.27 |

|---|---|

| [CVPR 2020] Few-Shot Class-Incremental Learning (0) | 2023.03.12 |